The pandemic that swept the world in early 2020 hit the performance industry hard, and many musicians and entertainment companies began to explore online concerts. Online live broadcasts differ from offline performances in many ways, and how to use technology to bring audiences and performers closer together has become a new topic. To strengthen the audience’s sense of participation, students at INSA HdF came up with some new ideas. More than 20 graduate students in the audio and video field from the school formed a group called Tempos Event and decided to add interactive segments to an online concert. The author serves as the group’s head of interactive technology.

1. Interactive Design

As an experimental interactive performance project, this project keeps its interactive design simple. When a specific camera shot appears in the live broadcast, viewers can click a specific area of the screen, and these clicks change the background visuals, lighting and video during the broadcast. As a simple example, whether the party officially moves on from the warm-up program depends on whether a sufficient proportion of interactive users click a specific area of the live screen in the first interactive scene. Once the clicks reach the required percentage, a burst animation rendered in real time on the background, together with lighting effects, ignites the live broadcast. Later segments use other forms of interaction, each with its own outcome.
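The warm-up rule described above can be sketched as a simple ratio check. This is only an illustration in Python: the function name `warmup_unlocked` and the default threshold of 0.5 are assumptions, since the text does not state the actual percentage used in the show.

```python
def warmup_unlocked(clicks: int, interactive_users: int,
                    required_ratio: float = 0.5) -> bool:
    """Return True once enough interactive users have clicked the target area.

    required_ratio is a hypothetical value; the real threshold was set by
    the creative team and is not given in the text.
    """
    if interactive_users <= 0:
        return False  # nobody is participating yet, so nothing can be unlocked
    return clicks / interactive_users >= required_ratio
```

For example, `warmup_unlocked(50, 100)` passes the assumed 50% threshold, while `warmup_unlocked(49, 100)` does not.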

2. Technical Implementation

The technology behind the interactive part consists of three layers: the front end, the middle layer and the back end. The front end is the online live page and the interactive elements on it. The back end is the software for real-time rendering and the software for playing pre-produced material. The middle layer is the bridge between the two: it triggers the corresponding real-time rendering according to the creative director’s interactive design plan.

2.1 Analysis of front-end technology

The online live broadcast page is built mainly with HTML5, CSS3 and JavaScript. Apart from the necessary explanatory text, it consists of the embedded video player of a third-party live broadcast platform and the interactive elements overlaid on top of that player. Because the interactive elements are tied closely to the live content, and different programs need different elements, asynchronous Ajax requests are essential. The viewer’s browser polls the server for the current cue point at a fixed rate. A change in the cue point means that a new program has started, or that an interactive session within the same program has started or ended. The browser then requests the new interactive elements from the server and renders them on top of the live video. During the actual live broadcast, the creative team updates these cue points manually as the performance progresses.
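The polling logic can be sketched as follows. The real page does this in browser JavaScript through Ajax; the Python below only illustrates the change-detection step. `CuePoller`, `fetch_cue` and `on_change` are hypothetical names: `fetch_cue` stands in for the request that returns the current cue point, and `on_change` for the code that loads and renders the new interactive elements.

```python
import time
from typing import Callable, Optional


class CuePoller:
    """Polls for the current cue point and reacts only when it changes."""

    def __init__(self, fetch_cue: Callable[[], str],
                 on_change: Callable[[str], None]) -> None:
        self._fetch_cue = fetch_cue    # stand-in for the Ajax request
        self._on_change = on_change    # stand-in for re-rendering the overlay
        self.current: Optional[str] = None

    def poll_once(self) -> bool:
        """Fetch the cue point once; return True if it changed."""
        cue = self._fetch_cue()
        if cue == self.current:
            return False               # same program and session: do nothing
        self.current = cue
        self._on_change(cue)
        return True

    def run(self, interval_s: float, polls: int) -> None:
        """Poll a fixed number of times at a fixed interval."""
        for _ in range(polls):
            self.poll_once()
            time.sleep(interval_s)
```

Separating `poll_once` from `run` keeps the comparison logic testable without a timer or a network connection.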

When a viewer clicks or hovers over an interactive element and the trigger conditions are met, the browser immediately plays a feedback animation, and at the same time an Ajax request adds a successful-trigger record to the website’s MySQL database. Data analysis programs on the website process the trigger records from all users and generate statistics for each interaction. This completes the work of the website at the front end of the system; the middle layer then picks up the statistics.
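A minimal sketch of how trigger records might be turned into per-interaction statistics is shown below. The record fields `interaction_id` and `user_id`, and the choice to count each user once per interaction, are assumptions made for illustration; the text does not describe the actual database schema or the analysis rules.

```python
from collections import defaultdict
from typing import Dict, Iterable, Mapping


def summarize_triggers(records: Iterable[Mapping[str, str]],
                       interactive_users: int) -> Dict[str, Dict[str, float]]:
    """Count distinct triggering users per interaction and their share."""
    users_by_interaction = defaultdict(set)
    for record in records:
        # Repeated triggers by the same user collapse into one set entry.
        users_by_interaction[record["interaction_id"]].add(record["user_id"])

    stats: Dict[str, Dict[str, float]] = {}
    for interaction_id, users in users_by_interaction.items():
        share = len(users) / interactive_users if interactive_users > 0 else 0.0
        stats[interaction_id] = {"users": len(users), "share": share}
    return stats
```

The `share` value is the kind of proportion that a threshold rule for an interactive scene could be checked against.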

2.2 Analysis of back-end technology

The back end of this project is responsible for the video and the various audio and lighting effects. Video falls into two categories: video rendered in real time with vvvv, and pre-produced video material played through Millumin. Four projectors were used on site, projecting onto the three faces of the curtain and the white set piece in front of it; Millumin’s built-in mapping function was used to model and map the curtain area and the white set piece. The video rendered in real time by vvvv serves as an input source for Millumin. This software-only approach replaces a hardware control system such as the Barco Event Master E2 and a playback system such as WatchOut.

vvvv is a real-time rendering system in which the rendering workflow is built graphically, which makes it an artist-friendly way to build real-time rendering programs. It can import external sources, such as video or image sequences, or generate images with its own particle, gravity or fluid systems. In this project, most of the interaction-driven real-time visuals requested by the creative team were built with the software’s own particle system.

2.3 Analysis of middle layer technology

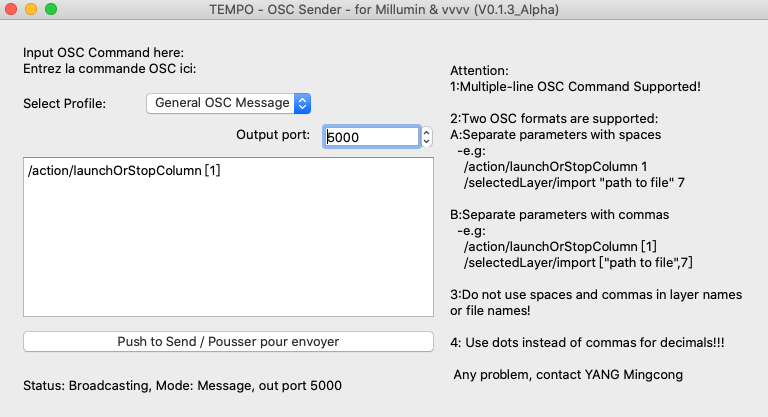

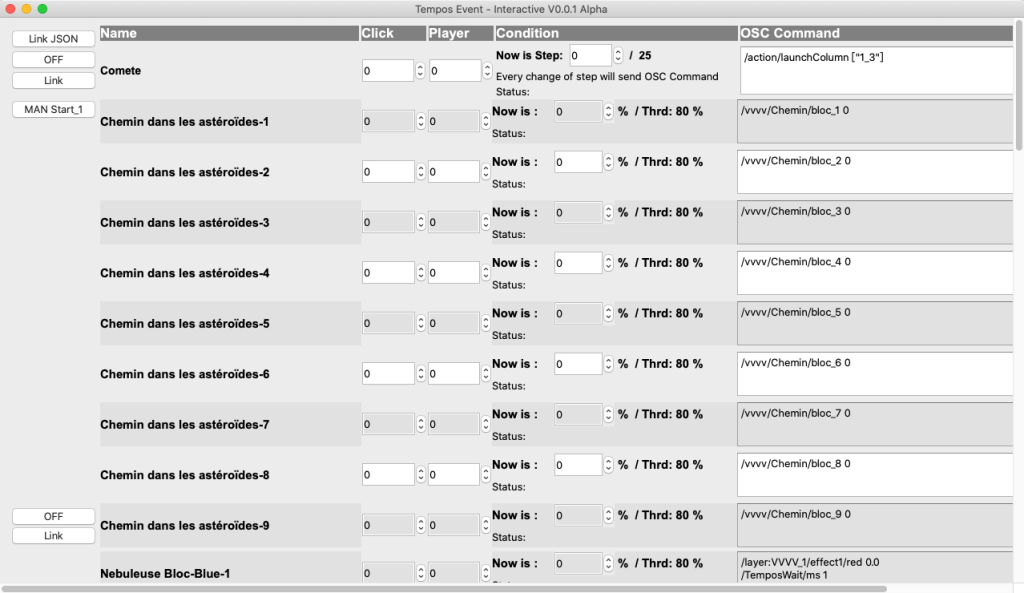

The middle layer was developed entirely from scratch. The front end relies on mature web and framework technology, and the back end on established software; the key task of the middle layer is to open up communication between the website and that professional software. From the start of the project, support for an open control protocol (such as MIDI or OSC) was a baseline requirement set by the interactive technology team, so the creative team checked whether each candidate could receive external control when choosing the video control software and the real-time rendering software. Once vvvv and Millumin had been selected, the interactive technology team studied the Open Sound Control (OSC) protocol that both programs accept. Because vvvv runs on Windows and Millumin on macOS, the team took platform compatibility into account and built a series of small utilities on Qt’s cross-platform framework that send OSC messages. We enumerated all the OSC commands that might be needed to control vvvv and Millumin, compiled them into a simple manual, and asked the creative team to run control tests with these self-developed utilities.
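To make the idea of sending an OSC message concrete, here is a minimal sketch that encodes a message following the OSC 1.0 binary format and sends it over UDP. It is written in Python for illustration only; the project’s own utilities were built with Qt. The address `/example/burst/intensity`, the host and the port used in the usage note are hypothetical and are not taken from the vvvv or Millumin documentation.

```python
import socket
import struct


def _pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    return data + b"\x00" * (4 - len(data) % 4)


def osc_message(address: str, *args) -> bytes:
    """Encode an OSC message with int32, float32 and string arguments."""
    type_tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("bool arguments are not handled in this sketch")
        if isinstance(arg, int):
            type_tags += "i"
            payload += struct.pack(">i", arg)   # big-endian 32-bit integer
        elif isinstance(arg, float):
            type_tags += "f"
            payload += struct.pack(">f", arg)   # big-endian 32-bit float
        elif isinstance(arg, str):
            type_tags += "s"
            payload += _pad(arg.encode("ascii"))
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return _pad(address.encode("ascii")) + _pad(type_tags.encode("ascii")) + payload


def send_osc(host: str, port: int, address: str, *args) -> None:
    """Send one OSC message as a single UDP datagram."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message(address, *args), (host, port))
```

A call such as `send_osc("192.168.1.20", 5000, "/example/burst/intensity", 0.8)` would send one float to a receiver listening on that assumed host and port. The addresses a given program actually accepts have to be taken from its own OSC documentation.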

Completing the control tests confirmed that communication between the middle layer and the back end was working. Because the front-end data travels over the network and carries some risk of failure, we gave priority to developing the manual control terminal: the number of participants and the number of clicks on each interactive element are obtained by hand and shown item by item in the middle-layer control software. Next, the website generates item-by-item interaction statistics in JSON format, which the middle-layer software reads at a fixed frequency, with a switch between manual and automatic modes. With this safeguard in place, the self-developed middle-layer software connects the online front end to the offline back end.
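The manual and automatic switching described above might look like the following sketch. The class name `StatsSource`, the mode names and the JSON shape (a mapping from interaction name to click count) are assumptions for illustration; the text does not specify the format produced by the website. The sketch keeps the last good automatic reading when a new one cannot be parsed, so a network or formatting failure does not wipe out the data in use.

```python
import json
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class StatsSource:
    """Holds manual and automatic interaction statistics and selects one."""
    mode: str = "manual"                      # "manual" or "auto"
    manual: Dict[str, int] = field(default_factory=dict)
    last_auto: Dict[str, int] = field(default_factory=dict)

    def update_auto(self, raw_json: str) -> bool:
        """Parse one JSON reading; keep the previous one if it is invalid."""
        try:
            data = json.loads(raw_json)
        except json.JSONDecodeError:
            return False
        if not isinstance(data, dict):
            return False
        self.last_auto = data
        return True

    def current(self) -> Dict[str, int]:
        """Return the statistics for whichever mode is active."""
        return self.manual if self.mode == "manual" else self.last_auto
```

An operator would enter values into `manual`, while a timer calls `update_auto` with each reading fetched from the website; flipping `mode` decides which set of numbers drives the back end.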
